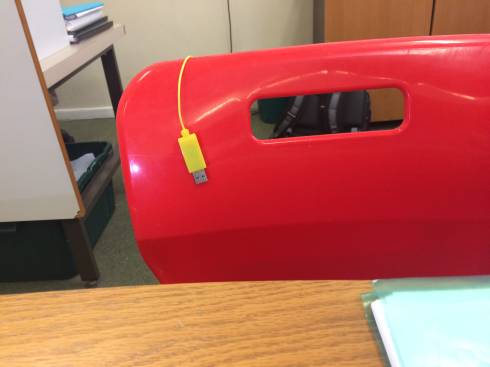

Playing with the Pixy2, I find the colour saturation of the images produced by the camera poor, and as a result the colour discrimination in colour_connected_components and pan_tilt_demo is disappointing unless you can ensure a completely neutral background. I’ve attached two pictures: one screen-grabbed from PixyMon (under Linux Mint) and the other of the same scene taken with my iPhone (5S). I would expect the red chair and the yellow USB lead to be easily distinguished from anything else. Comparing the HSL values of corresponding pixels in the two images, the iPhone image has roughly twice the saturation.
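A minimal sketch of that kind of comparison, using only Python's standard colorsys module. The pixel values below are made up for illustration (they are not sampled from the attached pictures), and mean_saturation is just a helper name chosen for this example.

```python
import colorsys

def mean_saturation(pixels):
    """Return the mean HSL saturation (0..1) of 8-bit (r, g, b) tuples."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        # colorsys works on floats in 0..1 and returns (hue, lightness, saturation)
        _h, _l, s = colorsys.rgb_to_hls(r / 255.0, g / 255.0, b / 255.0)
        total += s
        count += 1
    if count == 0:
        raise ValueError("no pixels given")
    return total / count

# Hypothetical samples from the same spots (chair, USB lead) in each image.
pixy_pixels = [(150, 110, 105), (160, 150, 110)]
phone_pixels = [(190, 70, 60), (210, 190, 60)]

ratio = mean_saturation(phone_pixels) / mean_saturation(pixy_pixels)
print(f"phone/Pixy2 saturation ratio: {ratio:.2f}")
```

Sampling the same objects in both pictures matters more than the number of pixels, since a ratio taken over whole frames would be dominated by the background.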

There was a similar thread in 2014 relating to Pixy1, but the response only had suggestions to do with colour balance and exposure, neither of which seems to address the problem.

The response referred to pixydevice/libpixy/camera.cpp on GitHub, so I went searching for its counterpart for Pixy2. I found pixy2/src/device/libpixy_m4/src/camera.cpp, which looks very similar but has an extra function, cam_setSaturation, that would seem to be just what I need.

This doesn’t seem to be exposed by PixyMon. So, my questions:

- Will it do me any good?

- How do I tweak it?